Question: what’s better, gnome-terminal or konsole? Maybe xfce4-terminal? Answer: I don’t care, they just work. It’s not a terribly complicated program from a user experience point of view. Here’s what I need from a terminal emulator:

- To show the text

- To have tabs

- Optionally to have a graphical options menu (to change fonts)

- To not annoy me with bullshit

And all three listed above fit the criteria without sweat.

A couple of years ago I started using a mac for work. Quick opinion: it’s ok, but the advantages are really overstated in online discussions. But can its built-in terminal emulator pass these four criteria? Can any other terminal I could find online? Let’s find out.

Terminal.app

Like everything apple, at first glance it seems that all is ok. It does have some weird behaviors though. For example, I’m used to killing the shell to close the tab; here it only kills the shell, and then you need to close the tab manually. I’m used to getting a warning when accidentally closing a tab with a program active; here you get nothing, but can instead restore it with cmd-z (as is common in this DE).

Here’s how they fuck it up: there’s no support for 24-bit color. Come on, which year is it, we’re young and spoiled and colors are very foundational to our modern terminal experience! How carefully I created my vim colorscheme, and for what!
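If you want to check whether a given terminal handles 24-bit color, here is a minimal sketch. It assumes the widely used SGR form `ESC[38;2;R;G;Bm` for a truecolor foreground; the function names are made up for illustration. On a terminal with 24-bit support the ramp looks smooth, otherwise it shows visible bands or wrong colors.

```python
RESET = "\x1b[0m"  # SGR 0: reset all attributes


def truecolor_fg(r: int, g: int, b: int) -> str:
    """Return the SGR escape sequence selecting a 24-bit foreground color."""
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError("color channels must be in 0..255")
    return f"\x1b[38;2;{r};{g};{b}m"


def gradient(width: int = 64) -> str:
    """Build a red-to-blue ramp made of `width` colored block characters."""
    cells = []
    for i in range(width):
        t = i * 255 // max(width - 1, 1)  # 0 at the left edge, 255 at the right
        cells.append(truecolor_fg(255 - t, 0, t) + "█")
    return "".join(cells) + RESET


if __name__ == "__main__":
    print(gradient())
```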

This is a story similar to the preinstalled coreutils on mac os: it works, but with weird usability, and doesn’t support the options you’re used to. Here are a couple more things: no clipboard escapes (only pbcopy), no commands to clear scrollback. Literally a couple, because it’s kind of inconsequential, hard to discover or google, and the lack of colors is the only missing thing I really care about. So I don’t use terminal.app.
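For reference, a sketch of what those two missing features look like on the wire, assuming "clipboard escapes" means the OSC 52 sequence and "clear scrollback" means the xterm `ESC[3J` extension. The helper names are hypothetical; a terminal that lacks support just ignores the bytes.

```python
import base64

# xterm extension: erase the saved lines (scrollback), not the visible screen.
CLEAR_SCROLLBACK = "\x1b[3J"


def osc52_copy(text: str) -> str:
    """Build an OSC 52 sequence asking the terminal to put `text` on the clipboard."""
    payload = base64.b64encode(text.encode("utf-8")).decode("ascii")
    # "c" selects the clipboard; BEL (\x07) terminates the OSC string.
    return f"\x1b]52;c;{payload}\x07"


if __name__ == "__main__":
    import sys

    sys.stdout.write(osc52_copy("copied from a script"))
    sys.stdout.flush()
```

The nice part of OSC 52 is that it also works over ssh, since it is the local terminal that interprets the sequence, which is exactly what pbcopy cannot do.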

Iterm2

Fun story: back around 2015 a friend of mine got themself a macbook after using linux, and became a complete fanboy. I found some of their quotes about the experience and translated them for you:

— And by the way, linux doesn’t even have iterm

— I couldn’t imagine that a terminal could be a piece of art

My asking for examples of the brilliance of iterm was left unanswered of course.

I also found my own quote from using iterm for the first time on their PC:

What the fuck is that blinking thing on top?

To this day, I don’t know what it was.

This pretty much sums up my experience with iterm: it works, but it has a lot of weird small annoying bugs, and also a lot of useless functions enabled by default. For example:

- Pasting text with cmd-v will replace \n with a line break

- After exiting sleep, the viewport will scroll one line up

- I accidentally pressed something and all instances of the letter “g” became highlighted, no idea how to exit it; had to kill the tab

- When an ssh connection drops, the iterm tab with it begins to beep each time I enter or exit it

- Hangs, somehow

- The dropdown animation is different on embedded and external displays: either sliding or easing in

- Hotkeys with alt don’t work in helix, with any behavior for alt set (could be either’s fault, but it works in konsole)

- A weird blue triangle shows against every fish prompt line, also visible in the scrollbar

- Closing alternate screen puts absolutely random lines from history right above the prompt line

- It was slow sometimes, but they mostly fixed it

- Dragging tabs around is incredibly finicky: one wrong pixel and it becomes a split, or goes into the fucking dock. And the highlights when dragging it are incredibly unclear on which action will be performed

- Sometimes when the dropdown is hidden, its hitbox remains on top of other apps

If you permit me diagnosing by looking at zero of iterm’s code, I think its problem is that it has too many features. The rule of development is: the bigger the code base, the more bugs it can house. And the more unrelated functionality you add, the more opportunities for it to interact in the wrong ways. Just look at iterm’s settings page: 8 tabs, each with like 5 subtabs. That’s just too much to support. And the worst part is, I don’t need all of this shit! You’re spreading yourself too thin to capture each marginal user, so you lose the marginal one that wants the compact experience.

Despite all this, it’s the terminal I used the longest, and indeed the one I still use, nowadays because of its drop-down functionality. All those bugs are annoying, but not breaking. And it does keep getting better, if ever so slowly.

Kitty

Ha-ha, it’s incredibly ugly. The tabline by default looks like shit; all modal windows do too.

Also the configuration window doesn’t open for some reason, so I considered it broken and deleted it. (Update: went back to make a screenshot and at least this problem seems to be fixed. Still ugly tho)

Still, I have to commend the author of kitty for trying out new things and trying to break the status quo. They popularized the idea that a terminal should be able to display images, have clipboard access and other goodies. Nice work!

Alacritty

No tabs. I personally believe that tmux or screen or zellij are the wrong approach: why do I need two terminal emulators in my way to see one terminal window? It’s silly. Their case is not helped by tmux and screen having incredibly weird controls, bad defaults, and a bunch of bugs.

But again, alacritty is an important achievement because it’s the terminal that started the performance revolution. I remember I tried it out back when it was first released, and my eyes opened to the fact that my terminal was actually laggy! Since then konsole has upped its game too and I don’t notice it anymore, so I have to thank the alacritty devs for pushing the world in this direction.

Wezterm

The tabline is ugly, again. Fortunately it can be made better by switching to ascii mode for tabs, but then you lose the ability to drag them with the mouse? It makes some implementation sense, but not UX sense.

The stupidest default is using ligatures in the font. Who the hell thought this was a great idea for general text processing? It’s so incredibly bizarre, because some pairs of symbols now take only one column, leaving giant gaps in the middles of words. Look, this is just ugly! Do the devs even use this?

On top of that, it has rendering bugs. Numba one: moving the window from a high DPI screen to a low DPI one breaks the fonts: they become weirdly thin and improperly smoothed into pixels. Unfortunately this doesn’t happen every time, and not with every font, and not even with every letter in a font. But often enough.

Bug numba two: sometimes when exiting from sleep, on every key press, the colors move from darker to lighter to darker and so on. Incredibly jarring. I remember I had this bug in iterm some years ago on a friend’s computer. Yay for macos consistency I guess.

Though I kind of liked the idea of configuring the terminal with lua: why not actually run scripts in an actual language in your config? I do it with vimscript and find it useful. And plus, a scripting language in the config allows for a natural extension into a plugin system; like vim, again.

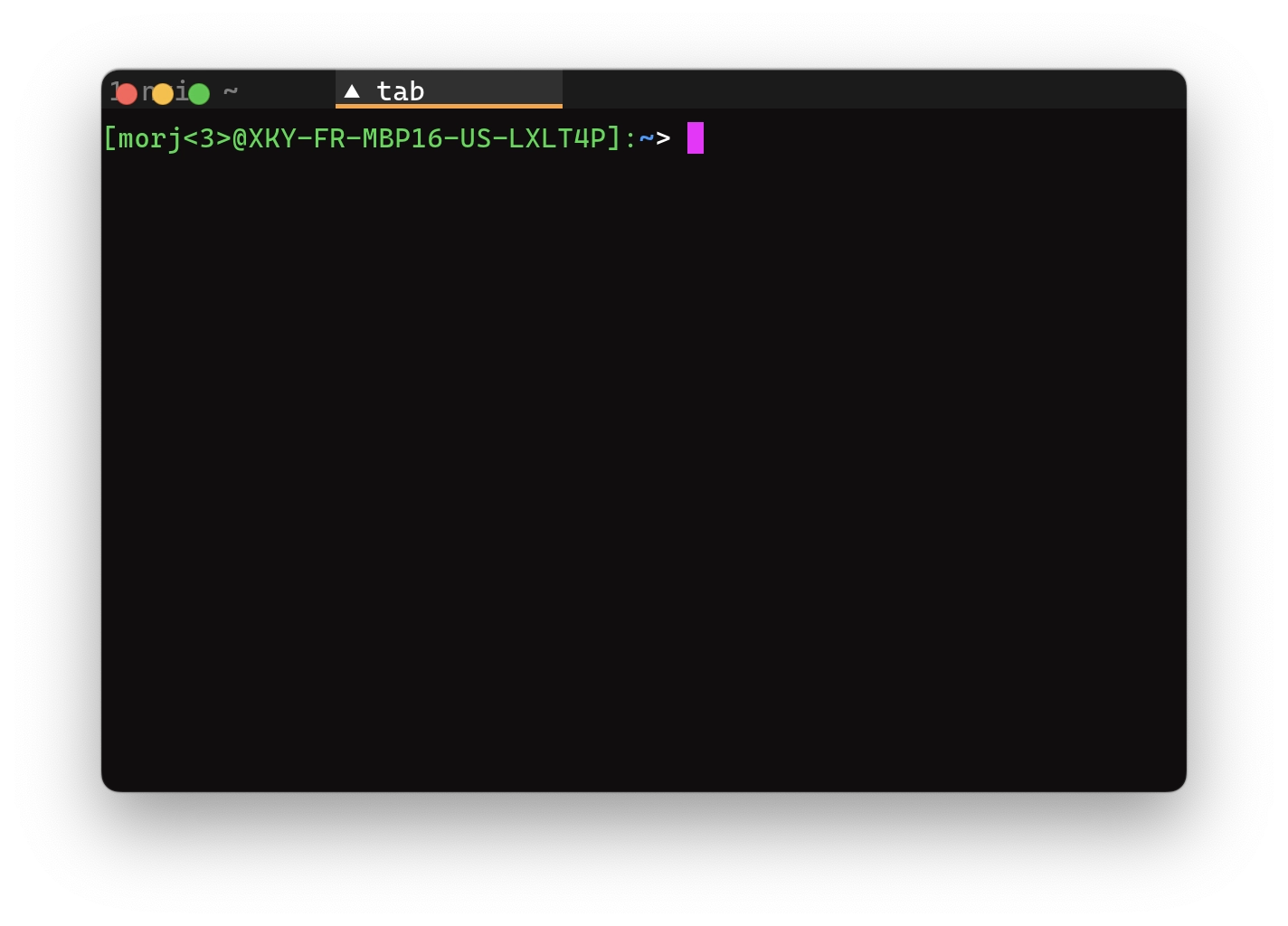

Rio

Continuing the theme of bad defaults, the default purple cursor color is ugly.

Continuing the theme of breaking bugs, nothing about tabs works correctly:

- When setting window.mode = "maximized", creating a new tab will make the window maximized

- Sometimes cmd-t creates windows instead of tabs

- Tabs are drawn in the window title bar, which is good, but they are drawn beneath window controls, which is huh

- Sometimes when moving a window between screens, the tab bar disappears, and part of the scrollback gets drawn in the titlebar; beneath the window controls, again

What’s unfortunate is that rio was made by someone who thought exactly as me: they took alacritty and added everything that I wanted it to have. Unfortunately, just like I would have, they also made it buggy and unstable on mac. But I don’t blame them. Why? Well, because…

Konsole

Holy shit, they have a mac release?

Unfortunately, it’s also broken: no inputs involving the ctrl key work, they are lost somewhere before reaching the app - I can’t even use them in the settings window.

This tells me that the problem with the terminals on mac is not terminals, but mac. Wow, a closed platform being hostile to developers? What a rare occurrence! Next you’ll tell me they always break backwards compatibility for important accessibility applications, oh wait. And that’s why all the kids online hate on megacorporations that control the world.

(Went back to make screenshots, and now some ctrl-hotkeys actually work! Will investigate this further, and maybe konsole will be the winner)

Hyper and tabby

Ha-ha-ha-ha-ha, electron-based terminals. What the fuck, guys. I have in my life had the misfortune of using windows-terminal, so I know that this is not even worth spending time installing it, when I was already complaining about performance problems in iterm.

I love that tabby at least is upfront about this:

Tabby is not a new shell or […]. Neither is it lightweight - if RAM usage is of importance, consider [alacritty and some others]

Warp

Holy hell, you don’t need an account to install it anymore.

Warp is a little bit cool. A couple of years ago they raised a lot of hype about reinventing the terminal and doing it right this time. What they released was a closed-source thing which required an account to download and use, and came preinstalled with AI integrations. But did they actually revolutionize the console experience?

Well, what they did that I can see with my own eyes first is make the prompt be controlled by the terminal, not by the shell. Pretty neat I guess, except it has the zoomer’s default of including a bunch of random bullshit in the prompt, like the git branch and some random time? I’m young, or at least I think I am, but I’m with the boomers on this: the prompt should have a minimum of interactive elements; a prompt with just $ is just ok, but you can add some small static info. Fortunately I can configure it to just show my regular fish prompt, which I immediately did.

What else did they revolutionize, uhhhhhhh

Pressing ctrl-space, which in my vim is bound to change keymap, instead opens some useless AI window. Innovations, man, they do be and how.

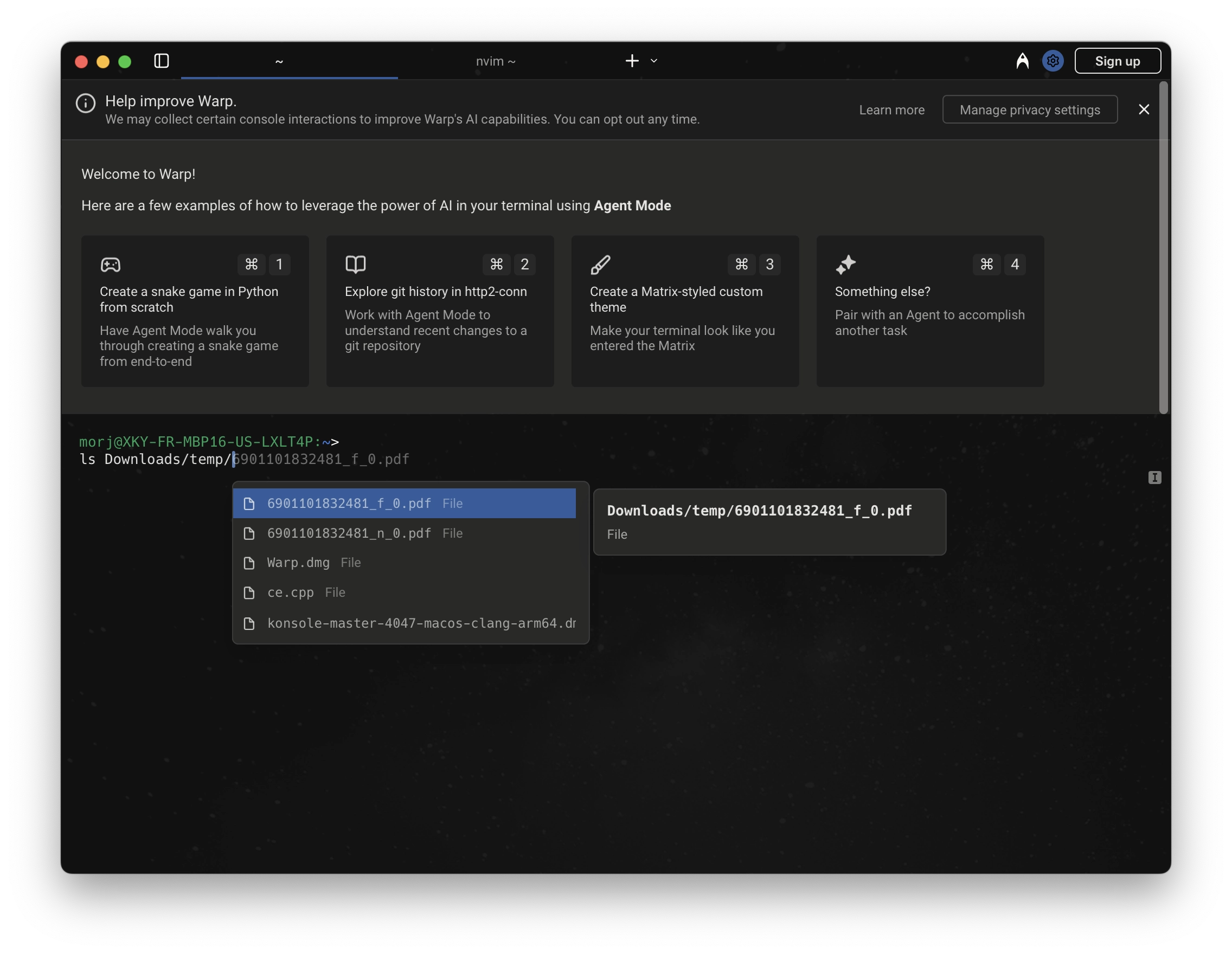

They made it so shell completions appear in a special drop-down window, like an IDE! That’s cool. This drop-down is exactly 6 lines long, if you want to see more be sure to move your hand to the arrow keys and press down a bunch, you stupid IDE using motherfucker. I hate completion drop-downs, but that’s a story for another time.

Completions also have an incredibly annoying bug: go to a directory that has two subdirs: “dist” and “dist-newstyle” for example. Write “d” and press tab to complete:

    +----------------+
    | dist/          |
    | dist-newstyle/ |
    +----------------+

Then write “ist/” to finish the first one and press tab again to try and complete the files inside it. Instead what you get is a dropdown to choose between:

    +-------+
    | dist/ |
    | dist/ |
    +-------+

This annoyed me so incredibly much that immediately after seeing it 3 times I removed warp, and can no longer comment on how revolutionizing it is to our collective personal computing experience.

Which is a bit sad, because reading some of their blogposts gave me a good idea of how to revolutionize shells; I wanted to see something cool that the warp guys have done. I’m still working on those shell ideas, so stay tuned, and maybe subscribe for updates?

As you noticed, in this post I try to be nice to the open-source devs and find something good to say about their work. I reflexively did so for warp here as well, but warp is closed source, so I can with a clear conscience tell them: go fuck yourselves with the AI bullshit! I’m not 100% against ai, in fact my own intelligence is artificial as well, but just look at this garbage in my face in the screenshot. This is my terminal, not your advertisement space.

Ghostty

Listen guys, how did we fall for the hype for a god damned terminal? How could Mitchell generate so much of it? Should every new project start in a closed beta to generate interest, and then still release only 80% baked?

But ghostty is pretty good honestly. One thing they did well is make a great default theme; incredibly pretty, kitty devs should learn from it.

It’s good, so I’ll start with a bunch of bad points to leave a better impression later.

While the default theme is pretty, the cursor blinks too fucking fast. It’s a small nitpick, but while trying to configure this I realized it doesn’t have a GUI configuration. Argh, so much for our 21st century experience.

Then I go look at the config documentation, and it doesn’t list the default values for options.

Then I try to set the cursor option, and it just doesn’t work: re-reading the config and then restarting the terminal does nothing. But then, restarting the terminal and re-reading the config (in this sequence) magically works? 80% bakedness in plain sight.

Fortunately I think they will fix those one day. Here’s what they are never going to fix: their own stupid vision for how a terminal should operate. Here’s one part of it: shell integrations. Disregard for a time that they are incredibly buggy; why do they exist at all? Neither konsole nor iterm has those and shells work just fine! There already exist customization points for shells to set the cursor shape, prompt location, cwd, and whatever else you might want - you don’t need an explicit integration for fish only! If tomorrow I create my own shell, do I need to send a patch to ghostty to support it? No, that’s silly and not going to get accepted.

But also, what if I use multiple shells? I use fish locally, but a lot of my remote servers use bash or csh - do I need to change and re-read the config before every ssh call? Or when I wanted to try out nushell, I ran it from fish inside ghostty, and it got completely fucked up: the cursor is misbehaving in both nushell and in vim started from it, and any keys involving ctrl just don’t work at all.

Here’s another part of the vision: keys don’t behave like you expect them to. Open vim and press ctrl-[ in insert mode - instead of going to normal mode you’ll go to normal mode and paste some text. Why? Usually ctrl-[ behaves exactly the same as pressing escape - because of some quirks of key handling in terminals of the 60s. The ghostty authors decided to fix this: instead of sending ambiguous ascii codes, some keys instead send a full escape sequence listing all modifiers. Honestly, this is a good idea! But no program existing today can recognize those gosh-darned sequences! Why am I again getting caught in someone’s API revolution, argh.
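To make the ambiguity concrete, here is a rough sketch of both encodings. The legacy one is the classic ASCII trick where ctrl masks the key code down to 5 bits. The "full escape sequence" is shown in the CSI u form used by the kitty keyboard protocol; that this is the exact format ghostty emitted is an assumption, and the function names are made up.

```python
def legacy_ctrl(key: str) -> bytes:
    """Classic encoding: ctrl keeps only the low 5 bits of the ASCII code."""
    if len(key) != 1 or not key.isascii():
        raise ValueError(f"no legacy control code for {key!r}")
    code = ord(key.upper())
    if not 0x40 <= code <= 0x5F:  # only '@'..'_' have control codes
        raise ValueError(f"no legacy control code for {key!r}")
    return bytes([code & 0x1F])


def csi_u_ctrl(key: str) -> bytes:
    """Unambiguous "CSI u" form: ESC [ <codepoint> ; <modifiers> u."""
    modifiers = 1 + 4  # 1 is the base value, 4 is the ctrl bit
    return f"\x1b[{ord(key)};{modifiers}u".encode("ascii")


if __name__ == "__main__":
    print(legacy_ctrl("["))  # the same single byte the escape key sends
    print(csi_u_ctrl("["))   # a multi-byte sequence the program must parse
```

With the legacy form, vim cannot tell ctrl-[ from escape, which is the whole point of the hotkey. With the second form, a program that does not parse the sequence sees an escape followed by stray characters, which would explain the "go to normal mode and paste some text" behavior.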

What makes the above point harder to complain about, is that while I was writing this article it was reverted: ctrl+keys now send the regular ascii bytes again. Yay compatibility! Boo your adherence to the principles.

What makes the above point easier to complain about: when you try to improve input, check that you are doing it right. When using a uk_ua layout for example, and pressing ctrl-ц, I expect it to behave exactly the same as ctrl-w, and not send a literal ctrl-ц as an escape sequence, which, again, no program knows how to handle. Nor should it know: localized hotkeys should be transparent to the running program, because you won’t put a milliard lines of localization into libreadline or rustyline or haskeline. If your terminal wants to do localization, then don’t make other programs pick up the work.
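A sketch of the behavior asked for here, under the assumption that the terminal resolves the hotkey by physical key position before encoding it. The table is a tiny illustrative slice of the Ukrainian layout (top letter row only), not a real keymap, and the names are hypothetical.

```python
# Ukrainian letters mapped to the Latin letter on the same physical key of a
# QWERTY keyboard. Illustrative and deliberately incomplete.
UK_TO_LATIN = {"й": "q", "ц": "w", "у": "e", "к": "r", "е": "t", "н": "y"}


def ctrl_byte(key: str) -> bytes:
    """Encode ctrl+key as a legacy control byte, translating by key position."""
    latin = UK_TO_LATIN.get(key.lower(), key)
    if len(latin) != 1 or not latin.isascii():
        raise ValueError(f"no control code for {key!r}")
    code = ord(latin.upper())
    if not 0x40 <= code <= 0x5F:  # only '@'..'_' have control codes
        raise ValueError(f"no control code for {key!r}")
    return bytes([code & 0x1F])


if __name__ == "__main__":
    # Both presses produce the same byte, so readline-style libraries
    # never need to know which layout was active.
    print(ctrl_byte("ц") == ctrl_byte("w"))
```

The translation lives in one place, the terminal, instead of in every line-editing library.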

Ok, now to the good points. It just works! No big bugs, no annoyances, just nice performance, as it should be! I like that it has less useless garbage than iterm. So for now, I’m using ghostty; not because it’s a peak of terminal design, just that on mac it’s not the pit either.

A side note on terminfo

Terminfo is bullshit. Now that I use ghostty, I can feel it everywhere: ssh to an old ubuntu, and even backspace doesn’t work somehow, because this ubuntu’s terminfo database obviously doesn’t have an entry for xterm-ghostty - ghostty was just created and this ubuntu is from 2004 or earlier. It’s stupid! It’s so stupid that iterm rightly just sets xterm-256color and makes it work. It’s just bizarre that in 2026 we have all decided to avoid using user-agents in the web, but it’s something people still use by default here.
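One possible workaround, as a sketch only: check whether the host knows the current TERM and fall back to xterm-256color when it does not. The directory list and the letter-or-hex subdirectory naming are assumptions about a typical ncurses install, and the function names are invented for this example.

```python
from pathlib import Path

# Common ncurses search locations; real systems may use others.
DEFAULT_DIRS = [
    Path.home() / ".terminfo",
    Path("/etc/terminfo"),
    Path("/lib/terminfo"),
    Path("/usr/share/terminfo"),
]


def has_terminfo(term: str, dirs=DEFAULT_DIRS) -> bool:
    """Return True if a compiled terminfo entry for `term` is found in `dirs`."""
    if not term:
        return False
    first = term[0]
    # Entries live under the first letter ("x/") or its hex code ("78/").
    subdirs = (first, format(ord(first), "x"))
    return any((Path(d) / s / term).is_file() for d in dirs for s in subdirs)


def pick_term(term: str, dirs=DEFAULT_DIRS, fallback: str = "xterm-256color") -> str:
    """Keep `term` when the host knows it, otherwise use the fallback name."""
    return term if has_terminfo(term, dirs) else fallback


if __name__ == "__main__":
    import os

    print(pick_term(os.environ.get("TERM", "")))
```

This would have to run on the remote side (say, from a login script) since the missing entry is on the server, not on the mac.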

Conclusion

Mac OS is an ass and hostile to developers, so all mac os terminal apps are flawed. Some of them almost work, and I thank the developers who make them so. There is no god and even the OSS devs are not gods.

Addendum: a quick rundown of windows terminal apps

There was a time in my life when I had to use windows for work, and it was a lot worse than mac. In all aspects, including the terminals.

- cmd.exe - hahahahahha, it’s shit. And slow. And no tabs. It’s such a bizarre experience, I recommend it

- Windows Terminal © ® ™ - well it has tabs.. and it’s somehow even slower

- cmder - has tabs, has a lot of bugs, crashes. Keeps a lot of bizarreness from cmd.exe

Now how did I handle it? I ran windows in a virtual machine, set up its SSH server, connected to ssh from the linux host and used konsole. This is the best option I found.